Day 64: Experimenting with Dictation & Speech Recognition

During my study session a few days ago on identifying a11y issues for people who use voice input to navigate the web, I ran across an interesting tweet thread started by Rob Dodson:

A11y experts, I have a voice access question: If you have a link with the text "learn more" but you put an aria-label on it so it says "learn more about shopping" are you still able to click the link by saying "click learn more"?

— Rob Dodson (@rob_dodson) February 1, 2019

So funny that this stuck out on my feed last night as I was finishing up my blog post! Admittedly, it sparked my curiosity about how ARIA affects voice dictation users, and spurred me on further to start testing with the different platforms that are available to people who need full voice navigation.
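To make the scenario in Rob's question concrete, here is a minimal sketch of the check I'd want to run on my own links: does the visible text still appear inside the `aria-label`? That is the condition WCAG 2.1 describes in Success Criterion 2.5.3 (Label in Name). The helper names `normalize` and `labelInName` are my own hypothetical ones, and the comparison is deliberately loose. It only compares strings; it does not predict how any particular speech recognition tool will actually behave.

```typescript
// Hypothetical helper: lowercase the text, replace punctuation with spaces,
// and collapse whitespace. Assumes plain ASCII labels for simplicity.
function normalize(text: string): string {
  return text
    .toLowerCase()
    .replace(/[^a-z0-9\s]/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// Hypothetical check: true when the visible text appears, as whole words,
// somewhere inside the accessible name (for example, an aria-label value).
function labelInName(visibleText: string, accessibleName: string): boolean {
  const visible = normalize(visibleText);
  const name = normalize(accessibleName);
  if (visible === "") {
    return true; // nothing visible to match against
  }
  // Pad with spaces so "learn more" does not match inside "learn moreover".
  return ` ${name} `.includes(` ${visible} `);
}

// The link from the tweet: visible text "learn more",
// aria-label "learn more about shopping".
console.log(labelInName("learn more", "learn more about shopping")); // true

// A label that drops the visible words entirely.
console.log(labelInName("learn more", "shopping details")); // false
```

If a check like this fails, the words a person sees on screen are not part of the name exposed to assistive technology, which seems like the riskiest case for someone saying "click learn more."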

Things I accomplished

- Experimented with the Apple Dictation and Siri combination (with brief use of VoiceOver)

- Experimented with Windows Speech Recognition in Cortana's company

- Attempted to write some of this blog post with a combination of Apple Dictation and Windows Speech Recognition

What I learned today

Disclaimer: I am not a voice input software user. This is VERY new to me, so lean very lightly on my "experience" and what I'm learning.

Learning curve and first-use exposure aside, Apple's Dictation feature didn't seem to have enough reach or intuition to do the multitasking I wanted to do. Additionally, I found that I had to keep reactivating it ("Computer...") to give commands. There was no continuity. Apparently, I'm not the only one disappointed by how little robustness Dictation + Siri offer. Read another person's feelings in "Nuance Has Abandoned Mac Speech Recognition. Will Apple Fill the Void?"

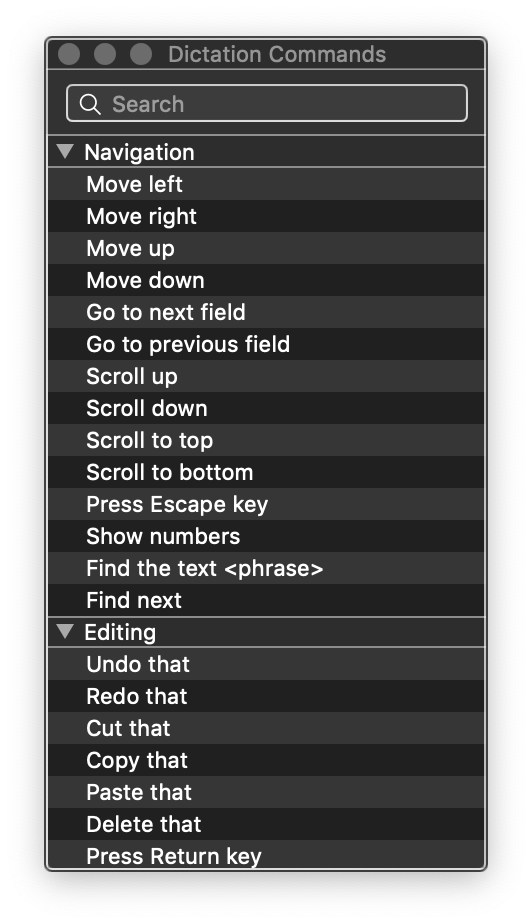

Here is Apple's dictation commands dialog that I had off to the side of my screen as I worked with it enabled:

The number system to access links appears to be common across dictation software. I found it in both Apple Dictation and Windows Speech Recognition. And I know Dragon has it, too.

Windows Speech Recognition was just as awkward for me. However, I felt it was built more to include navigating my computer, and not solely dictation of documents. Microsoft has a handy Speech Recognition cheatsheet.

Here is the Windows Speech Recognition dock, which reminds me of what I've seen online with Dragon software:

I found myself struggling to not use my keyboard or mouse. If I had to rely on either of these OS built-in technologies, I think I'd definitely invest in something more robust. Eventually, I want to get a hold of Dragon NaturallySpeaking to give that a try for comparison.

For people who can use the keyboard along with their speech input, there are keyboard shortcuts to turn listening on and off:

- Apple: Fn Fn

- Windows: Ctrl + Windows key

By far, this was the hardest thing for me to test with. I think that's due to the AI relationship between me and my computer. It was nothing like quickly turning on another piece of software and diving right in. Instead, it required the software to understand me clearly, which didn't happen often. Ultimately, it will take some time for me to get comfortable with testing my web pages with speech recognition software. Until then, I'll be leaning heavily on other testing methods as well as good code and design practices.

As a final note, based on the above, I think purchasing more robust speech recognition software, like Dragon, would be a harder sell to my employer when it comes to accessibility testing. It's a hard enough sell for me to want to purchase a Home edition license for my own personal use and for testing freelance projects.